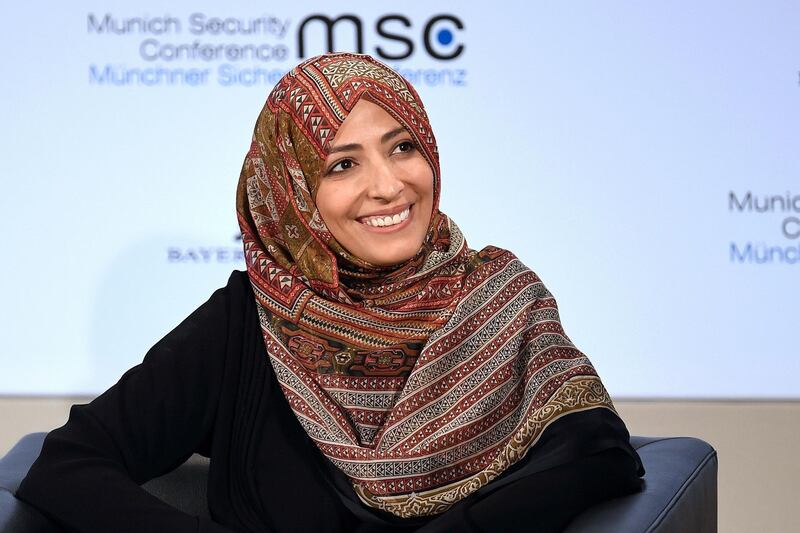

Facebook's Oversight Board has said it vetted the Yemeni activist Tawakkol Karman before appointing her to its panel, but the company refused to outline in detail the individual merits of her appointment.

Ms Karman is a sympathiser of the Muslim Brotherhood who played a prominent role in the country's Islah Party as it organised protests against the government of Ali Abdullah Saleh almost a decade ago.

The campaigner's inclusion in the 20-member group, which has a $130 million budget to adjudicate on the content moderation decisions of the under-pressure social media network, makes her an outlier on a board of former politicians, lawyers, academics and ex-journalists.

Analysts have expressed concern that appointing someone with ties to an extremist organisation, one associated with the very content that Facebook is regularly asked to remove, compromises the oversight project from the outset.

In a statement to The National, the Oversight Board said Ms Karman's appointment had undergone its standard scrutiny procedures. Facebook's founder Mark Zuckerberg established the board after US and international pressure to stem the tide of recruitment and propaganda across Facebook and its other platforms.

It said a shortlist of candidates had been chosen for their familiarity with digital content, decision-making skills and ability to exercise independent judgement, and to reflect gender and geographic balance. Four co-chairs, including the former Danish prime minister Helle Thorning-Schmidt, then carried out interviews with the prospective members.

"All Members underwent significant vetting, agreed to a code of conduct, and have a deeply held commitment to freedom of expression and human rights, including addressing questions relating to censorship," the statement said. "The code of conduct, which all Board Members must uphold when undertaking case deliberation, requires them to ensure impartiality and objectivity in their decisions."

"We can’t provide a comment on the specific appointment process for any one Board member."

Facebook said the board's decisions would be handed down by panels of five from the 20 members and that Ms Karman and the others would not be making individual rulings. "No single Board Member has a particular remit. No single Member of the Oversight Board was chosen to be a representative of any one country or specific community," it said. "No one Member holds unilateral power as an individual on the Oversight Board or can make content moderation decisions alone."

On her own Facebook account, Ms Karman has described the Muslim Brotherhood as a "freedom-fighting movement" and accused the administration of Donald Trump of supporting terrorism in the region. After she was awarded the Nobel Peace Prize, the Yemeni Coalition for Civil Society Organisations described Ms Karman as a "war-monger not a peace messenger".

The oversight announcement sparked a boycott of Facebook by users around the region and fuelled allegations of bias in selection among US-based commentators.

The Counter Extremism Project, which has been a leading voice on Facebook's systemic failure to remove harmful material and propaganda from its platform, has been sharply critical of the appointments. It said the composition of the board and its structures do not mark a departure from the "delay, deny and deflect" approach long taken by the company.

"Beyond the obvious issues of why did it take two years to form this Board, and can a Board of 40 part-time people really manage the scale and complexity of managing 2.3 billions users, I don't believe that this Oversight Board is addressing the core issue with Facebook," said Hany Farid, professor at the University of California Berkeley and a Senior Advisor to the Counter Extremism Project.

"The main issue is not one of free expression, and which posts should stay up and which posts should be taken down. The issue, rather, is one of algorithmic amplification - how Facebook uses algorithms to maximise engagement with micro-targeting, which in turn promotes the outrageous, conspiratorial, salacious, and blatant lies, whether they be in the form of posts, news, or paid advertising."