More than 30 former Facebook moderators in Ireland, Spain and Germany are suing the social media company and four of its third-party outsourcing agents after suffering psychological damage from viewing graphic content.

From terrorist beheadings to mass shootings, Facebook’s content moderators view up to 1,000 extreme images on every shift.

Employed by recruitment agencies on behalf of Facebook, the former employees claim they were given inadequate training to deal with the disturbing content and "no support" to deal with the mental trauma.

In the claims being lodged with Ireland's High Court, the plaintiffs say they have suffered post-traumatic stress disorder as a result of the job.

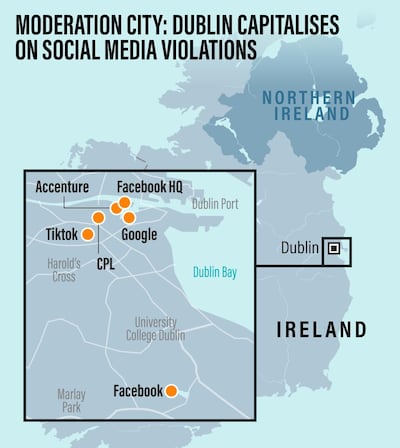

The people involved in the claims were employed in Dublin, Ireland, by CPL and Accenture, in Spain by CCC, and in Germany by Majorel.

Solicitor Diane Treanor of Coleman Legal Partners, who is representing the moderators, said each of her clients has suffered a form of PTSD.

"We have a multi-party action but each case will be judged on its own merit, many people have physical, serious and minor effects and they will be assessed on how they are," she told The National.

“They all worked at Facebook for various periods, from a year to three years. Most got traumatised quite quickly, the trend seems to be after nine to 15 months.

“There have been different symptoms, from panic attacks, anxiety, each one is different, some have recurring nightmares, hypersensitivity and depression.

“Each client was working for a different market depending on their languages, they were in different positions. Some people might speak Arabic and are prone to analysing a certain market and had their own content to review, such as beheadings.

“They have all been assessed by psychiatrists who have determined how they have suffered PTSD and they have assessed that those who were suffering from PTSD were not given any assistance. Under the Work Safety and Health Act in Ireland the companies are in breach of it.”

The claims are being lodged in Ireland because Dublin is the headquarters of Facebook in Europe.

The first claim was lodged against Facebook and CPL a year ago by former Dublin employee Chris Gray.

Six months after starting his employment he realised his mental health was beginning to suffer.

He told The National he had to watch child deaths and terrorist incidents, and the psychological scars from the content he viewed remain with him to this day.

“I never realised at the time how much the horrific things I had seen had affected me,” he said.

“I was becoming very hardened to what I was seeing and I could see my mood was changing. I just soldiered on, I didn’t realise that myself and others were being harmed by it.

“I’m just really angry that this has been done to us. If they had just acknowledged and managed the problem and put systems in place I could have continued to do the work.

“But we weren’t allowed to talk to anyone and treated like this problem did not exist.”

Ms Treanor is hoping his case will be set down for trial later this year.

“We are now waiting for the defendants to furnish their defence, they are wanting some documents from us and once we have that we will be looking at setting a high court trial date,” she said.

“There is a backlog in the courts here due to Covid-19, so the trials will take time. If successful our clients can expect thousands in damages.

“We cannot force Facebook to change their work ethics or policies on how they deal with this but if they do not address it there will be a continual flow of plaintiffs. They have a duty of care under the Health and Safety Act.”

In a landmark settlement in the US last May, Facebook agreed to pay $52 million to 11,250 current and former moderators to compensate them for mental health issues developed on the job.

The class action was launched in September 2018 by former Facebook moderator Selena Scola, who claims she developed PTSD after being placed in a role that required her to regularly view images of rape, murder and suicide.

Eight ways Facebook can address moderator trauma

Six months ago, Dr Paul Barrett, from New York University's Stern Centre for Business and Human Rights, said that Facebook needed to take moderation in-house owing to the psychological trauma suffered by staff.

In his report he made eight urgent recommendations, including ending the outsourcing of content moderation, raising moderators' status in the workplace, doubling their number to improve the quality of content review, providing them with access to psychiatrists and sponsoring research into the health risks of content moderation, in particular PTSD.

“If we want to improve how moderation is carried out, Facebook needs to bring content moderators in-house, make them full employees, and double their numbers,” he said.

“Content moderation is not like other outsourced functions, like cooking or cleaning, it is a central function of the business of social media, and that makes it somewhat strange that it’s treated as if it’s peripheral or someone else’s problem.”

In response to the report, Facebook vowed to continue reviewing its working practices.

"The teams who review content make our platforms safer and we're grateful for the important work that they do," a Facebook representative told The National.

“Our safety and security efforts are never finished, so we’re always working to do better and to provide more support for content reviewers around the world.”

Facebook says it offers extensive support to its moderators and is committed to helping them in a difficult role.

“We are committed to providing support for those that review content for Facebook as we recognise that reviewing certain types of content can sometimes be difficult,” the representative said.

“Everyone who reviews content for Facebook goes through an in-depth, multi-week training programme on our Community Standards and has access to extensive psychological support to ensure their well-being. This includes 24/7 on-site support with trained practitioners, an on-call service, and access to private health care from the first day of employment.

“We are also employing technical solutions to limit their exposure to graphic material as much as possible. This is an important issue, and we are committed to getting this right.”

Facebook employs more than 15,000 moderators through outsourcing companies at more than 20 sites worldwide.

Many of its European moderators are based in Ireland, in the Dublin suburb of Sandyford.

The site is also home to moderators for YouTube and other tech companies.

TikTok recently joined the social media city but employs only in-house moderators. In January, Google also announced a move to the site.

Outsourcing firm CPL is a major employer of moderators for Facebook and would not comment on the legal case.

"The health, safety and well-being of our employees is our top priority, and we have many measures in place to ensure employee well-being, including unrestricted access to counselling services as well as a 24/7 on-call service," a CPL representative said.

“We operate a professional, safe and rewarding work environment and are very proud of the great work carried out by our team.”

Accenture told The National it provides "proactive, confidential and on-demand counselling that is backed by a strong employee assistance programme".

“We continually review, benchmark and invest in our well-being programmes to create a supportive workplace environment,” it said.

CCC and Majorel have not responded to The National's requests for comment.