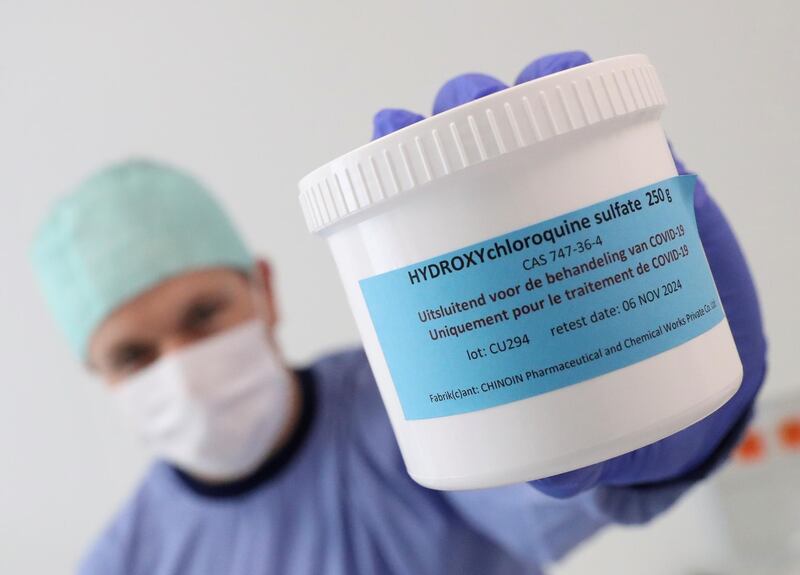

Remember that antimalarial treatment US President Trump said should be "put in use immediately" against Covid-19 back in March? He took a lot of flak for backing hydroxychloroquine, but now a new study of almost 3,500 patients in Italian hospitals has found it cuts death rates by 30 per cent.

Not so fast: in the past few days another study, this time involving about 12,000 patients given the drug, known colloquially as HCQ, concluded it does not save lives at all – and may actually be harmful. So who is right?

Welcome to the baffling world of evidence-based medicine, where science seems incapable of reaching definitive answers.

It is not only with Covid-19 that the evidence seems to forever flip-flop. In recent weeks, researchers have claimed that statins may really be useless for controlling cholesterol, and that pregnant women face a far higher risk from caffeine than currently believed.

Hardly a week goes by without some equally headline-grabbing study turning accepted wisdom on its head. Yet while they often appear in prestigious journals after supposedly being checked by experts, the truth is they are not equally reliable. This is not because the researchers are making stuff up (though that can happen, and has).

Most often, the reason is that the study or the interpretation of its findings – and sometimes both – is not fit for purpose.

Again, this does not (necessarily) mean the researchers are incompetent. So what are the telltale signs a study is likely to prove reliable?

What is the most reliable type of study?

When investigating “what works”, the gold standard is a double-blinded Randomised Controlled Trial, or RCT. Put simply, these compare two groups of patients, randomly allocated to receive either the treatment under test, or an alternative “control” treatment, which is often an inactive placebo.

The randomisation cuts the chances of anything other than the treatment affecting the outcome, as both groups are equally likely to get their share of patients who fare better or worse from other causes.

Both the researchers and the patients are also “blinded” to who is getting what, to eliminate any bias – unconscious or deliberate – in interpreting the outcome.

So why aren’t RCTs always used?

Decades of research have shown that most treatments do not work with most patients. As a result, an RCT will often need many hundreds or even thousands of patients to reveal the effectiveness of a treatment with enough precision to tell if it’s worth using. This makes RCTs time-consuming and very expensive – and often limits their size.
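To see why the numbers climb so quickly, here is a rough sketch of a standard textbook sample-size calculation for comparing death rates in two groups. The death rates are invented for illustration and do not come from any of the studies discussed here; the 5 per cent significance level and 80 per cent power are common conventions, assumed rather than taken from a particular trial.

```python
import math
from statistics import NormalDist


def patients_per_group(p_control, p_treated, alpha=0.05, power=0.80):
    """Approximate number of patients needed in EACH arm of a trial to
    detect a difference between two event rates (normal approximation,
    two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # threshold for significance
    z_power = NormalDist().inv_cdf(power)          # allowance for desired power
    variance = p_control * (1 - p_control) + p_treated * (1 - p_treated)
    effect = p_control - p_treated
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Hypothetical death rates: 20% on placebo, falling to 15% or to 18% on the drug.
big_effect = patients_per_group(0.20, 0.15)
small_effect = patients_per_group(0.20, 0.18)
print(big_effect, small_effect)
```

Under these assumptions, a fall in the death rate from 20 to 15 per cent needs roughly 900 patients per group, while a fall from 20 to 18 per cent needs around 6,000. The smaller the benefit, the bigger the trial has to be.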


An alternative is to run a so-called observational study, where researchers just compare those given the treatment with those who weren’t – while trying to account for any effects not caused by the treatment by analysing patient data. This was the approach used by researchers in Italy who claimed HCQ is effective against Covid-19.

What is wrong with observational studies?

Lacking randomisation and blinding, observational studies can fall prey to various biases, including unknown “confounders” that aren’t balanced out between the two groups of patients.
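A toy simulation shows how a confounder can mislead. In this sketch the drug does nothing at all: whether a patient dies depends only on whether they are frail. The only difference between the two scenarios is who gets the drug. Every probability below is made up for illustration, and "frailty" is a stand-in for whatever unrecorded factor might influence both treatment and outcome.

```python
import random


def death_rate(patients):
    """Fraction of patients in the group who died."""
    return sum(died for _, died in patients) / len(patients)


def simulate(n, randomised, rng):
    """Return (treated, untreated) lists of (frail, died) pairs.
    The drug has NO effect: death depends on frailty alone."""
    treated, untreated = [], []
    for _ in range(n):
        frail = rng.random() < 0.5
        if randomised:
            gets_drug = rng.random() < 0.5  # a coin toss decides
        else:
            # Assumed prescribing habit: fitter patients are far more
            # likely to be given the drug than frail ones.
            gets_drug = rng.random() < (0.2 if frail else 0.8)
        died = rng.random() < (0.30 if frail else 0.10)
        (treated if gets_drug else untreated).append((frail, died))
    return treated, untreated


rng = random.Random(2020)
obs_treated, obs_untreated = simulate(100_000, randomised=False, rng=rng)
rct_treated, rct_untreated = simulate(100_000, randomised=True, rng=rng)

# Gap in death rates between untreated and treated patients.
obs_gap = death_rate(obs_untreated) - death_rate(obs_treated)
rct_gap = death_rate(rct_untreated) - death_rate(rct_treated)
print(f"observational gap: {obs_gap:.3f}, randomised gap: {rct_gap:.3f}")
```

In the observational scenario the useless drug appears to save lives, simply because healthier patients were more likely to receive it. With random allocation the apparent benefit shrinks to almost nothing.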

The Italian study on HCQ was also retrospective, meaning the researchers could not always find important information such as what dosage patients received, or whether other drugs were also used. So while the sheer number of patients looks impressive, the study’s headline claim was treated with scepticism by many researchers.

How about combining lots of small trials?

While the most reliable evidence comes from large RCTs, it’s possible to combine many small studies in a “meta-analysis”. This is what an international team has now done for HCQ, examining 28 studies of patients who received the drug.

As most of the studies were observational, the team carried out a “systematic review” to weed out studies at high risk of bias. They then used statistical methods to combine the outcome of those remaining, including three RCTs. The results – based on more than 12,000 HCQ patients – were revealing.
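The article does not say which statistical methods the team used. One of the simplest approaches is "inverse-variance" pooling, in which each study's result is weighted by how precise it is. The sketch below uses the fixed-effect version with three invented studies; real meta-analyses often use more elaborate random-effects models, and none of these figures comes from the HCQ research.

```python
import math


def pool_fixed_effect(estimates):
    """Combine (log_risk_ratio, standard_error) pairs using
    inverse-variance weights: precise studies count for more."""
    weights = [1 / se ** 2 for _, se in estimates]
    total = sum(weights)
    pooled = sum(w * est for w, (est, _) in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1 / total)
    return pooled, pooled_se


# Hypothetical studies: (log risk ratio of death, standard error).
# A log risk ratio above zero means more deaths among treated patients.
studies = [(0.10, 0.20), (-0.05, 0.30), (0.15, 0.10)]

pooled, pooled_se = pool_fixed_effect(studies)
low = math.exp(pooled - 1.96 * pooled_se)
high = math.exp(pooled + 1.96 * pooled_se)
print(f"pooled risk ratio {math.exp(pooled):.2f} (95% CI {low:.2f} to {high:.2f})")
```

The pooled estimate always sits within the range of the individual results, but its uncertainty is smaller than that of any single study, which is the whole point of combining them.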

The observational studies collectively pointed to a possible benefit from HCQ, but with a lot of uncertainty. In contrast, the three RCTs all pointed to HCQ being at best useless – and most probably harmful.

Given the greater reliability of RCTs, most researchers – and the World Health Organisation – have concluded HCQ should be abandoned.

So what about statins and cholesterol?

More than 200 million people worldwide take statins to protect against heart attacks and strokes. So last month's publication of a study claiming statins do not work inevitably made headlines.

On the face of it, the evidence seems impressive. The researchers based their conclusion on a systematic review of 35 studies – all of them RCTs – involving tens of thousands of patients.

However, the researchers didn't perform a meta-analysis, claiming the RCTs covered too many different types of patient and statin. Instead, they simply totted up how many of the RCTs gave statistically non-significant findings.

Crucially, this does not mean the trials showed statins do not work – merely that they failed to give convincing evidence either way. Statisticians have warned researchers about making this blunder for decades.
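A small worked example shows why counting non-significant results is misleading. Suppose five trials each find the same modest benefit, but each is too small to be convincing alone. The figures are invented, and the trials are assumed to be identical, which is an idealisation: the statins researchers argued their trials were too varied to pool.

```python
import math
from statistics import NormalDist


def p_value(estimate, se):
    """Two-sided p-value for an estimate with a given standard error."""
    z = estimate / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Five hypothetical trials, each reporting (log risk ratio, standard error).
# A negative log risk ratio means fewer events among treated patients.
trials = [(-0.20, 0.15)] * 5

individual_p = [p_value(est, se) for est, se in trials]
significant_count = sum(p < 0.05 for p in individual_p)

# Pool the trials with inverse-variance weights (fixed-effect model).
weights = [1 / se ** 2 for _, se in trials]
pooled = sum(w * est for w, (est, _) in zip(weights, trials)) / sum(weights)
pooled_se = math.sqrt(1 / sum(weights))
pooled_p = p_value(pooled, pooled_se)

print(f"significant trials: {significant_count} of {len(trials)}")
print(f"pooled p-value: {pooled_p:.4f}")
```

Tallying the trials gives "none of five significant", which sounds like failure. Yet taken together the same data give a pooled result that comfortably clears the usual 5 per cent threshold.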

Combined with other, more technical, errors, the statins study serves chiefly to show that publication in a “serious” journal is no guarantee of reliability.

What about caffeine and pregnant mums?

Mothers-to-be have long been advised to cut back on caffeine intake, but according to a new review of the evidence, the only safe amount is zero.

Again, the evidence seems impressive, being based on dozens of studies. Yet all but one were observational and thus subject to biases, making it impossible to claim caffeine is responsible for any ill-effects.

Importantly, the review was also not systematic – meaning the author may have missed studies that could overturn the overall conclusion.

The upshot for pregnant mums: nothing to see here – and don’t worry about having an occasional cup of coffee.

Robert Matthews is visiting professor of science at Aston University, Birmingham, UK