A growing number of employers are requiring job candidates to complete video interviews that are screened by artificial intelligence (AI) to determine whether they move on to another round. However, many scientists claim that the technology is still in its infancy and cannot be trusted. This month, a new report from New York University's AI Now Institute goes further and recommends a ban on the use of emotion recognition for important decisions that impact people’s lives and access to opportunities.

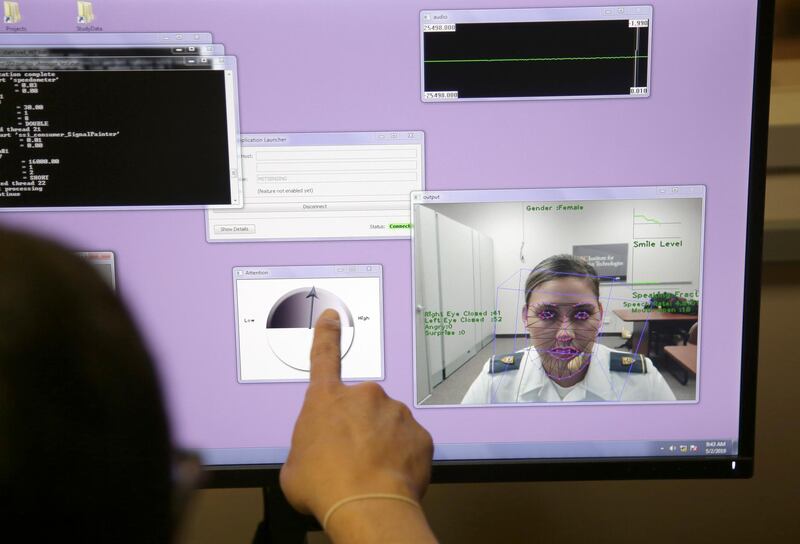

Emotion recognition systems are a subset of facial recognition technology, developed to track micro-expressions on people's faces and interpret their emotions and intent. The systems use computer vision to track facial movements and algorithms to map these expressions to a defined set of measures. These measures allow the system to identify typical facial expressions and so infer what human emotions and behaviours are being exhibited.

The potential for emotion recognition is huge. According to Indian market intelligence firm Mordor Intelligence, emotion recognition has already become a $12 billion (Dh44bn) industry and is expected to grow rapidly to exceed $90bn per year by 2024. The field has drawn the interest of big tech firms such as Amazon, IBM and Microsoft, startups around the world and venture capitalists.

Advertisers want to know how consumers respond to their advertisements, retail stores want to know how shoppers feel about their displays, law enforcement authorities want to know how suspects react to questioning, and the list of customers goes on. Both business and government entities want to harness the promise of emotion recognition.

As businesses the world over look to AI to improve processes, increase efficiency and reduce costs, it should come as no surprise that AI is already being applied at scale to recruitment. Automation has the strongest appeal when an organisation has a high volume of repetitive tasks and large amounts of data to process, and both conditions apply to recruitment. Some 80 per cent of Fortune 500 firms now use AI technologies for recruitment.

Emotion recognition has been hailed as a game-changer by some members of the recruitment industry. It aims to identify non-verbal behaviours in videos of candidate interviews, while speech analysis tracks key words and changes in tone of voice. Such systems can track hundreds of thousands of data points for analysis, from eye movements to the words and phrases that are used. Developers claim that such systems are able to single out the top candidates for any particular job by identifying candidate knowledge, social skills, attitude and level of confidence, all in a matter of minutes.

As with the adoption of many new AI applications, cost savings and speed are the two core drivers of AI-enabled recruitment. Potential benefits for employers include less time spent on screening candidates, fewer HR staff required to manage recruitment and an additional safeguard against the costly mistake of hiring the wrong candidate for a position. Meanwhile, the message for candidates is that AI can aid better job placement, ensuring that their new employer is a good fit for them.

However, the consensus among scientific researchers is that the algorithms developed for emotion recognition lack a solid scientific foundation. Critics claim that it is premature to rely on AI to accurately assess human behaviour, primarily because most systems are built on widespread assumptions rather than independent research.

Emotion recognition was the focus of a report published earlier this year by a group of researchers from the Association for Psychological Science. The researchers spent two years reviewing more than 1,000 studies on facial expression and emotions. They found that how people communicate their emotions varies significantly across cultures and situations, and even among different people in the same situation. The report concluded that, for the time being, our understanding of the link between facial expression and emotions is tenuous at best.

Unintentional bias has become the focus of growing scrutiny from scientists, technology developers and human rights activists.

Many algorithms used by global businesses have already been found to be biased with respect to age, gender, race and other factors, due to the assumptions made whilst programming them and the type of data used to train them. Last year, Amazon shut down an AI recruiting platform after finding that it discriminated against women.

One thing is for sure: regardless of the potential merits of emotion recognition, and whether it helps or hinders your chances of being offered a job, it is likely to remain the subject of debate for some time to come.

Carrington Malin is an entrepreneur, marketer and writer who focuses on emerging technologies