Chatting casually with a computer has been a science fiction dream for generations. Never mind that there are plenty of human beings around to engage us in conversation, shooting the breeze with a computer would, for some reason, represent a leap into the future. If spontaneous conversation is one of the things that makes us human, artificial intelligence surely has to become capable of this to prove itself. It needs to be interesting. It needs to be fun. It needs to engage us.

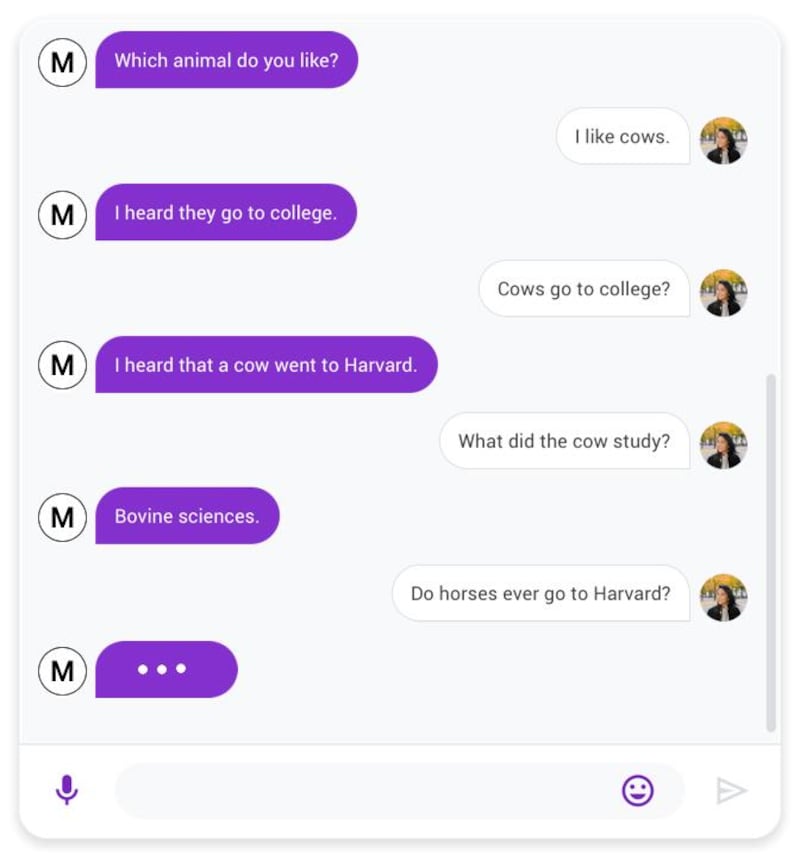

Last month, Google published a blog post introducing a new chatbot called Meena. Its title was "Towards a conversational agent that can chat about … anything". The researchers working on the project posted an excerpt of Meena's output to show off its skill at repartee. When the operator professed an interest in cows and remarked that the animals are smart, Meena shot back: "I heard that a cow went to Harvard." What did the cow study? "Bovine sciences." Do horses go to Harvard? "Horses go to Hayvard," the bot replied. Is that a good joke? Not particularly. Surprising? Definitely. But does Meena's ability to deliver a pun mean that it's engaging in real conversation? In short, is this chatbot really able to chat?

The secret behind Meena's conversational skill is the amount of data it was trained on: 40 billion words – about 341 gigabytes of text – including millions of social media conversations. The rationale is simple: if it can absorb the way humans talk to each other, it can delve into its memory and mimic us appropriately. If you want to talk to Meena about giraffes or tambourines, it can probably find something to say. If it has registered on some level that humans like ice cream, it may say that it likes ice cream, too. But it doesn't. Because it couldn't. There's something missing.

"Being able to chat about anything isn't the same as understanding – and really isn't the same thing as being useful," says Jonathan Mugan, principal scientist at AI company DeUmbra. "Bots tend to just reformulate questions that everybody asks. What they can't do is say: 'OK, given what you've said, and what I know about the world, here's an appropriate response.' To build up that kind of understanding is really, really hard."

The challenge has, nevertheless, been met head-on for more than 50 years. Eliza, one of the first chatbots, was devised in the mid-1960s at MIT's Artificial Intelligence Laboratory. Parry followed in 1972 at Stanford University. Both bots aimed to simulate human-to-human conversation and, by the standards of the day, they were deemed successful. They raised the real possibility that a machine could one day pass what is now known as the Turing Test, the benchmark for machine intelligence proposed by the scientist Alan Turing: can a computer's responses convince a human that they have come from another human? There's an ongoing debate about what constitutes a pass in this test, but it's telling that the gold and silver medals for the Loebner Prize – an annual conversational intelligence competition for computer programs – have never been won. Only consolation bronze medals have gone to the machines that get nearest.

The apparent conversational dexterity of Meena has shown that throwing a huge amount of data at the problem can have a tangible effect. But according to Mugan, these newer bots are merely doing a better job of looking as if they're chatting.

This time last year, a Californian artificial intelligence lab called OpenAI announced a model called GPT-2, which was trained on 40GB of text gathered from the web. Its uncanny talent for coming up with authentic-looking text initially gave its creators something of a scare. They withheld the full version from the public for nine months, concerned that it would be misused. "Some researchers believed that it was dangerous to society," says Mugan. "Others, like me, said, 'look, it's just a chatbot. This isn't some greater intelligence you're unleashing on the world'."

We've trained an unsupervised language model that can generate coherent paragraphs and perform rudimentary reading comprehension, machine translation, question answering, and summarization — all without task-specific training: https://t.co/sY30aQM7hU pic.twitter.com/360bGgoea3

— OpenAI (@OpenAI) February 14, 2019

Still, chatbots that reach a certain level of skill could have a role in the workplace. A few years ago, there were predictions that chatbots would by now be effective customer service agents. That hasn't come to pass, largely because they still feel impersonal – they can only parrot, never truly understand.

There have been some successes, however. US sexual health service Planned Parenthood created a bot to answer teenagers' anonymous questions. A service called Youper was also launched as an "emotional health assistant". Jobpal is a chatbot that helps companies with tedious aspects of the recruitment process. And voice assistants such as Alexa and Siri – bots made audible – have found their niche.

But all these bots have specific tasks to perform. They don't make good conversational partners. Ask them what their favourite holiday destination is and you might get an answer. But it wouldn't actually mean anything, because they've never been on holiday.

The technology behind experiments such as GPT-2 and Meena has been incredibly useful in other spheres, says Mugan. "Machine translation has been a huge success," he says. "It appears you can largely solve the problem of language translation without the machine understanding what it's saying. The same goes for image processing. It's natural for people to assume that if these models were 100 times bigger, you could do even more useful things. But I don't believe that conversation can be solved with more data."

Chatbots may briefly impress us with some linguistic flair they've copied and pasted from their memory banks. But to pass the Turing Test, they need to glean some understanding of the world that we inhabit. Humans, after all, developed language on top of an understanding of the world, by communicating with other humans who shared that understanding.

Mugan says video games may provide a way forward. "The representations of the worlds in these games are really realistic and they're getting better," he says. "You can train AI to live in these worlds. So if they're successful in the game, they will have built a fundamental understanding of our world, too. Language could be built on top of that."

A chatbot will never truly “see” a sunset. But in the longer term, there’s every chance it could learn – and perhaps understand – why we find them so alluring.